Newsnomics, reporter Kim Ji-hye |

The 22nd session of Cultural Talk for Diversity (C Talk) was held on October 26, 2025, under the theme “AI For All.”

Since its founding in 2023, this monthly forum has brought together individuals from diverse cultural and professional backgrounds to explore global issues surrounding diversity and inclusion.

This session focused on the intersection of artificial intelligence and society, asking whether AI is truly inclusive or whether it risks reinforcing existing inequalities.

Two distinguished speakers, Daniela Draugelis, an intercultural trainer and coach, and Janith Dissanayake, a technology researcher and consultant, shared their insights on how AI can become both a bridge to innovation and a potential divider among societies.

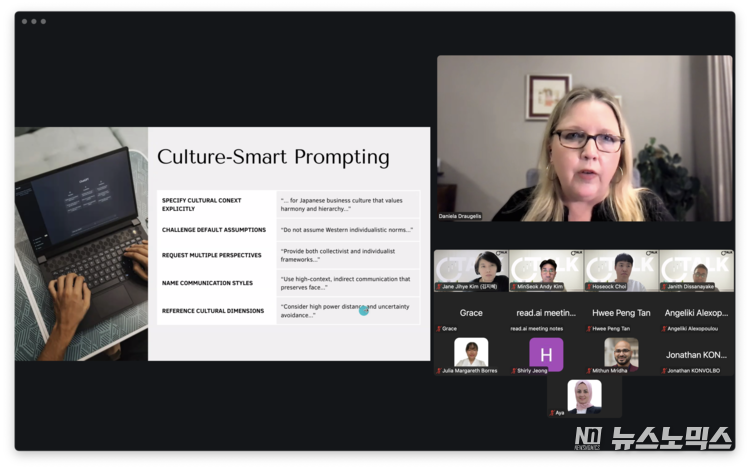

Daniela opened the session with a thought-provoking presentation titled “AI + CQ: Designing Culturally Intelligent AI.”

She discussed how Cultural Intelligence (CQ), the ability to understand and adapt across cultural contexts, can serve as a critical framework for designing inclusive and relatable AI systems.

Daniela emphasized that most AI systems today are shaped by Western, English-speaking, and industrialized perspectives, leading to what she described as “cultural colonialism” and “cultural washing.”

These terms refer to the tendency of technology to present itself as global and neutral while actually reflecting narrow cultural assumptions.

She argued that AI models often fail to account for diverse communication styles, cultural values, and social norms outside WEIRD (Western, Educated, Industrialized, Rich, and Democratic) societies.

By integrating CQ principles, she suggested, developers and intercultural professionals can help ensure that AI systems respect and reflect the diversity of human experience.

Daniela encouraged participants to think critically about their own AI use in intercultural work.

She called for collaboration between AI specialists and cultural experts to build systems that are not only efficient but also empathetic and contextually aware.

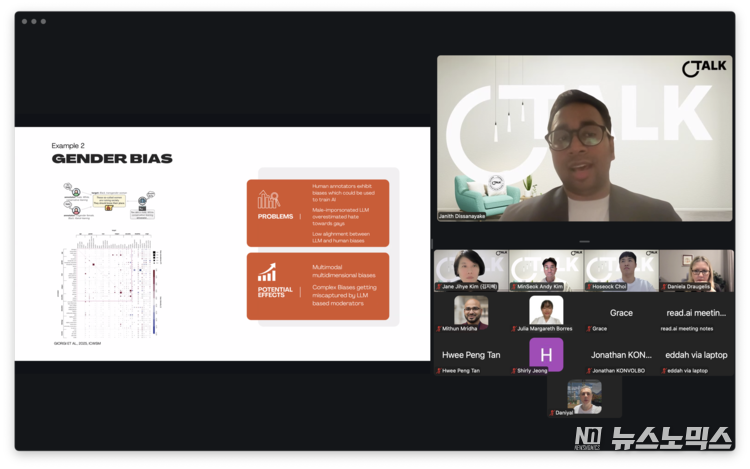

Following Daniela’s talk, Janith Dissanayake delivered an impactful presentation titled “AI: A Bridge or a Gap Widener.” He began with a warning: “Know the tools you use before the tools use you.”

Referring to recent studies, Janith noted that the rapid adoption of large language models and the dominance of a few major providers have created growing concerns about monopolization and inequality in the AI industry.

Janith explained that most AI models are trained on data from developed nations, where men have greater online presence than women, resulting in gender, cultural, and socioeconomic biases embedded in AI systems.

He illustrated how these biases manifest in everyday applications: recruitment algorithms that favor male profiles, image analysis systems that misjudge women’s age and credibility, and moderation tools that misinterpret social attitudes toward marginalized groups.

Together, these examples reveal how hidden biases in training data can shape machine decisions in subtle but harmful ways.

He also highlighted the growing economic divide in AI access, noting that the affordability of advanced AI tools remains a major concern for developing nations and low-income users.

He questioned whether subscription-based AI services, which often require significant financial investment, are truly accessible to everyone. “If AI becomes a privilege of the rich,” he cautioned, “it will widen global inequalities instead of closing them.”

He concluded by warning about the increasing concentration of power within the AI industry, noting that a handful of major players now dominate both technology and infrastructure.

Without effective international regulation, he cautioned, the world risks allowing a few entities to shape the digital future for everyone.

The session ended with an engaging group discussion on the future of culturally sensitive AI.

Participants shared their perspectives on how intercultural expertise can help address algorithmic bias and how education systems must evolve to prepare future generations for an AI-driven world.

Both speakers emphasized that technology must remain human-centered, with empathy and cultural understanding guiding its evolution. “AI should serve as an assistive partner, not a replacement for human context,” Daniela noted.

Cultural Talk for Diversity is a supportive community where people exchange insights on culture and DEI, creating spaces where members help one another learn, share knowledge, and grow together through meaningful projects.

Cultural Talk Group:

https://www.linkedin.com/groups/14364384/

For those interested in joining future Cultural Talk for Diversity events or collaborating as a speaker or participant, feel free to reach out:

janekimjh@naver.com

Monthly sessions are held via Zoom and are open to individuals passionate about diversity, inclusion, and cross-cultural understanding.

Stay tuned for the next session and be part of a global conversation that bridges cultures and fosters mutual respect.